DeepStream

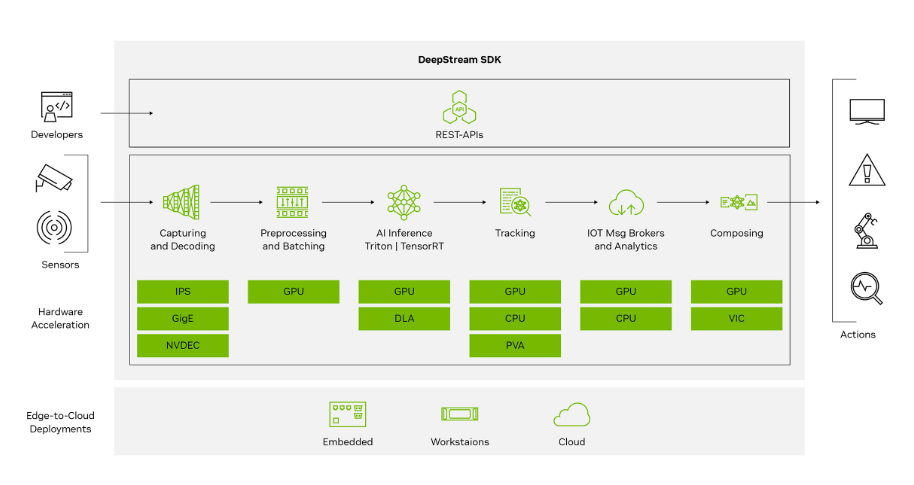

This guide explains how to install and run the NVIDIA DeepStream SDK on Jetson Orin devices. DeepStream is a GStreamer-based SDK for building GPU-accelerated AI video analytics pipelines, and it is optimized for Jetson's CUDA and NvMedia stack.

1. Overview

- Real-time video analytics SDK provided by NVIDIA

- Accelerated with TensorRT and CUDA

- Supports multi-stream AI inference and object tracking

- Input sources include RTSP, USB, CSI cameras, and local video files

- Built-in object detection, classification, and tracking capabilities

This guide covers:

- Installation methods (.deb package and Docker)

- Running sample pipelines

- Integrating custom models

- Docker usage (including jetson-containers)

- Common issues and tips

2. System Requirements

Hardware

| Component | Minimum Requirement |
|---|---|
| Device | Jetson Orin Nano / NX / AGX |
| RAM | ≥ 8 GB |
| Storage | ≥ 10 GB |

Software

- JetPack 6.1 GA or later (L4T ≥ R36.4)

- Ubuntu 22.04 (the root filesystem shipped with JetPack 6.x)

- CUDA, TensorRT, cuDNN (included in JetPack)

- Docker (optional, for containerized deployment)
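The L4T requirement can be checked before installing anything. The sketch below assumes the first line of /etc/nv_tegra_release has the usual form `# R36 (release), REVISION: 4.0, ...`; the function names are illustrative and not part of any NVIDIA tool.

```shell
# Parse a line such as
#   "# R36 (release), REVISION: 4.0, GCID: ..., BOARD: generic, ..."
# and print the release as "36.4.0". Prints nothing if the line does not match.
parse_l4t_release() {
  printf '%s\n' "$1" |
    sed -n 's/^# R\([0-9]*\) (release), REVISION: \([0-9.]*\).*/\1.\2/p'
}

# Succeeds when the given release (e.g. "36.4.0") is R36.4 or newer.
l4t_meets_minimum() {
  l4t_major=${1%%.*}
  l4t_rest=${1#*.}
  l4t_minor=${l4t_rest%%.*}
  [ "$l4t_major" -gt 36 ] ||
    { [ "$l4t_major" -eq 36 ] && [ "$l4t_minor" -ge 4 ]; }
}

# Only runs on a device that actually has the release file.
if [ -r /etc/nv_tegra_release ]; then
  release=$(parse_l4t_release "$(head -n 1 /etc/nv_tegra_release)")
  if l4t_meets_minimum "$release"; then
    echo "L4T $release: OK"
  else
    echo "L4T $release: older than R36.4"
  fi
fi
```

On a host that is not a Jetson the final check is skipped, so the snippet is safe to source anywhere.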

3. Installing DeepStream

glib Migration

To migrate to a newer glib version (e.g., 2.76.6), follow these steps:

Prerequisites: Install the following packages:

sudo pip3 install meson

sudo pip3 install ninja

Compilation and installation steps:

git clone https://github.com/GNOME/glib.git

cd glib

git checkout <glib-version-branch>

# e.g., 2.76.6

meson build --prefix=/usr

ninja -C build/

cd build/

sudo ninja install

Verify the installed glib version:

pkg-config --modversion glib-2.0
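Before rebuilding glib it may be worth checking whether the installed version already meets the target. This is a minimal sketch that assumes GNU `sort -V` is available (it is on Ubuntu); `version_ge` is a helper name introduced here, and 2.76.6 is the example target from the steps above.

```shell
# version_ge A B: succeeds when version A is at or above version B.
# Uses GNU sort's version ordering; the smaller of the two sorts first.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

target=2.76.6   # example target version from this guide

# Only reports when pkg-config knows about glib-2.0.
if command -v pkg-config >/dev/null 2>&1 &&
   installed=$(pkg-config --modversion glib-2.0 2>/dev/null); then
  if version_ge "$installed" "$target"; then
    echo "glib $installed is already at or above $target"
  else
    echo "glib $installed is older than $target"
  fi
fi
```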

Dependency Installation

sudo apt update

sudo apt install -y \
  libssl3 \
  libgstreamer1.0-0 \
  gstreamer1.0-tools \
  gstreamer1.0-plugins-good \
  gstreamer1.0-plugins-bad \
  gstreamer1.0-plugins-ugly \
  gstreamer1.0-libav \
  libgstrtspserver-1.0-0 \
  libjansson4 \
  libyaml-cpp-dev

Install librdkafka

- Clone the librdkafka repository from GitHub:

git clone https://github.com/confluentinc/librdkafka.git

- Configure and build the library:

cd librdkafka

git checkout tags/v2.2.0

./configure --enable-ssl

make

sudo make install

- Copy the generated libraries to the DeepStream directory:

sudo mkdir -p /opt/nvidia/deepstream/deepstream/lib

sudo cp /usr/local/lib/librdkafka* /opt/nvidia/deepstream/deepstream/lib

sudo ldconfig

Method 1: Install via SDK Manager

- Download and install SDK Manager from the NVIDIA official website.

- Connect the device: Use a USB-C cable to connect the Jetson Orin device to the host computer.

- Launch SDK Manager: Run the sdkmanager command on the host and log in with your NVIDIA developer account.

- Select target hardware and JetPack version: Choose the corresponding Jetson Orin device and appropriate JetPack version in SDK Manager.

- Enable DeepStream SDK: Check the DeepStream SDK option under "Additional SDKs."

- Begin installation: Follow the prompts to complete the installation.

Method 2: Using DeepStream Tar Package

- Download DeepStream SDK: Visit the NVIDIA DeepStream download page and download the DeepStream SDK tar package for Jetson (e.g., deepstream_sdk_v7.1.0_jetson.tbz2).

- Extract and install:

sudo tar -xvf deepstream_sdk_v7.1.0_jetson.tbz2 -C /

cd /opt/nvidia/deepstream/deepstream-7.1

sudo ./install.sh

sudo ldconfig

Method 3: Using DeepStream Debian Package

- Download the DeepStream Debian package: Visit the DeepStream Debian download page and download the DeepStream SDK Debian package for Jetson (e.g., deepstream-7.1_7.1.0-1_arm64.deb).

- Install:

sudo apt-get install ./deepstream-7.1_7.1.0-1_arm64.deb

Method 4: Using Docker

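- Install Docker and NVIDIA Container Toolkit: Ensure both are installed on the Jetson.

- Pull the DeepStream Docker image. The 6.1-samples tag shown here corresponds to DeepStream 6.1; for a different release, pick the tag on NGC that matches your JetPack version: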

docker pull nvcr.io/nvidia/deepstream-l4t:6.1-samples

- Run the container:

docker run -it --rm --runtime=nvidia \
  -v /tmp/.X11-unix:/tmp/.X11-unix \
  -e DISPLAY=$DISPLAY \
  nvcr.io/nvidia/deepstream-l4t:6.1-samples

Alternatively, use the community-maintained jetson-containers:

jetson-containers run $(autotag deepstream)
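The `--runtime=nvidia` option only works when the NVIDIA runtime is registered with the Docker daemon. A minimal sketch of that check follows; it assumes `docker info --format '{{json .Runtimes}}'` prints a JSON object keyed by runtime name, and `has_nvidia_runtime` is a helper name introduced here.

```shell
# Reads the JSON from `docker info --format '{{json .Runtimes}}'` on stdin
# and succeeds when a runtime keyed "nvidia" is present.
has_nvidia_runtime() {
  grep -q '"nvidia"[[:space:]]*:'
}

# Only runs where the docker CLI is installed.
if command -v docker >/dev/null 2>&1; then
  if docker info --format '{{json .Runtimes}}' 2>/dev/null | has_nvidia_runtime; then
    echo "nvidia runtime is registered"
  else
    echo "nvidia runtime not found; check the NVIDIA Container Toolkit setup"
  fi
fi
```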

Installation Verification

Check version information:

deepstream-app --version-all

Expected output:

deepstream-app version 7.1.0

DeepStreamSDK 7.1.0

CUDA Driver Version: 12.6

CUDA Runtime Version: 12.6

TensorRT Version: 10.3

cuDNN Version: 9.0

libNVWarp360 Version: 2.0.1d3
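The version check can be scripted, for example in a provisioning step. The sketch below assumes the output contains a `DeepStreamSDK <version>` line as in the listing above; `ds_sdk_version` is a helper name introduced here.

```shell
# Reads `deepstream-app --version-all` output on stdin and prints the
# version found on the "DeepStreamSDK <version>" line.
ds_sdk_version() {
  sed -n 's/^DeepStreamSDK[[:space:]]*\([0-9][0-9.]*\).*/\1/p' | head -n 1
}

expected=7.1.0   # version used throughout this guide

# Only runs where deepstream-app is on PATH.
if command -v deepstream-app >/dev/null 2>&1; then
  found=$(deepstream-app --version-all 2>/dev/null | ds_sdk_version)
  if [ "$found" = "$expected" ]; then
    echo "DeepStream $found installed"
  else
    echo "expected DeepStream $expected, found '${found:-nothing}'"
  fi
fi
```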

4. Running Examples

Step 1: Run Default Example

- Navigate to the configs/deepstream-app directory on the dev kit:

cd /opt/nvidia/deepstream/deepstream-7.1/samples/configs/deepstream-app

- Run the reference application:

# deepstream-app -c <path_to_config_file>

deepstream-app -c source30_1080p_dec_infer-resnet_tiled_display_int8.txt

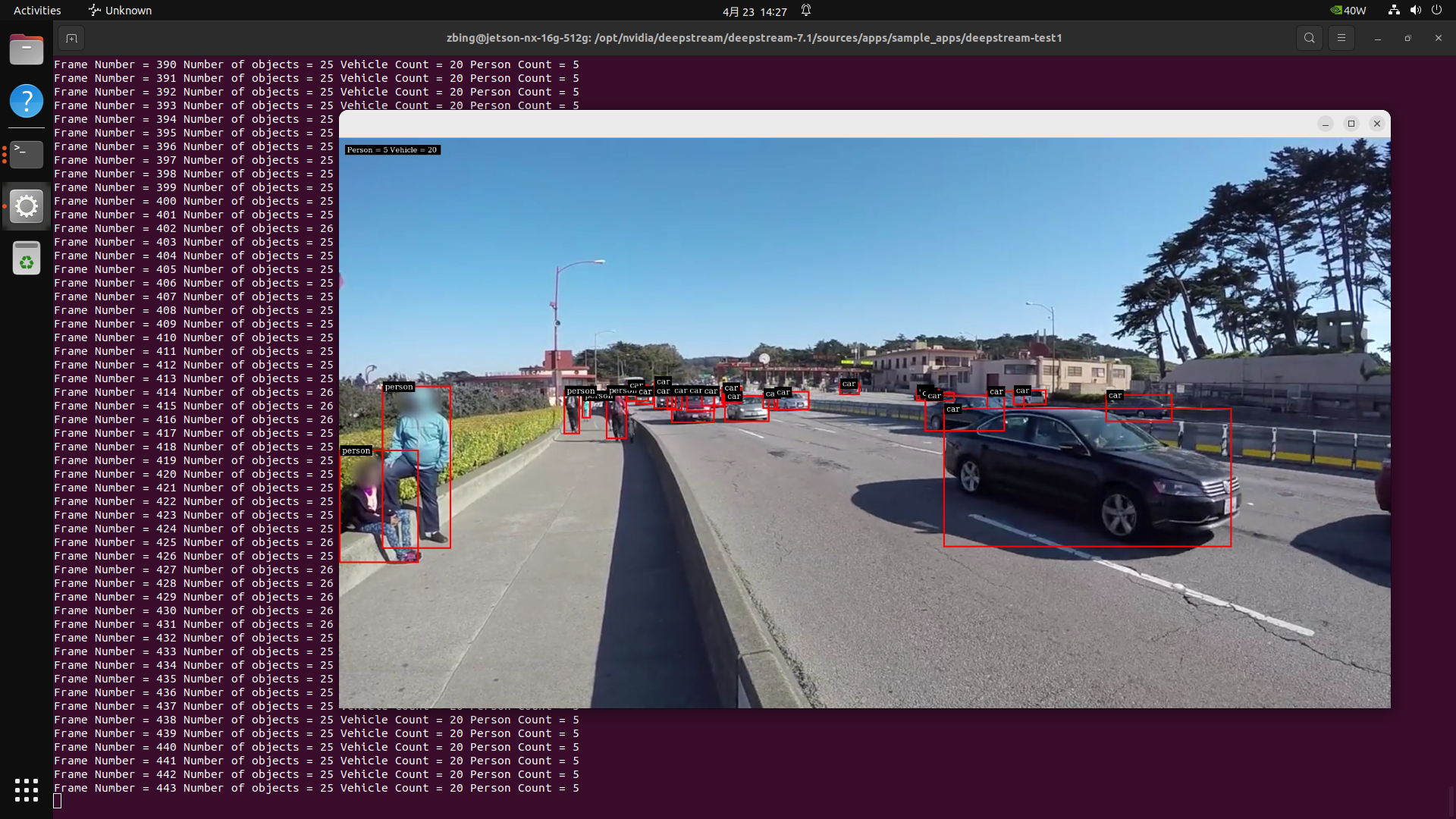

This command will pop up a video window displaying real-time detection results:

Step 2: Using USB or CSI Camera

Modify the input section in the configuration file:

[source0]

enable=1

type=1

camera-width=1280

camera-height=720

camera-fps-n=30

Then run:

deepstream-app -c <your_camera_config>.txt

🎥 USB cameras use type=1 (V4L2). CSI cameras use type=5, which captures through nvarguscamerasrc.
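A camera source group usually also names the device and the frame-rate denominator. The following sketch prints a [source0] group for either camera kind, using the deepstream-app keys camera-fps-d, camera-v4l2-dev-node, and camera-csi-sensor-id; the function name and the 1280x720 at 30 fps defaults are assumptions carried over from the snippet above.

```shell
# Prints a [source0] group for deepstream-app.
#   $1: "usb" (type=1, V4L2) or "csi" (type=5, nvarguscamerasrc)
#   $2: device index, e.g. 0 for /dev/video0 or CSI sensor 0 (default 0)
camera_source_group() {
  case "$1" in
    usb) cam_type=1; cam_key=camera-v4l2-dev-node ;;
    csi) cam_type=5; cam_key=camera-csi-sensor-id ;;
    *) echo "unknown camera kind: $1" >&2; return 1 ;;
  esac
  cat <<EOF
[source0]
enable=1
type=$cam_type
camera-width=1280
camera-height=720
camera-fps-n=30
camera-fps-d=1
$cam_key=${2:-0}
EOF
}

# Example: print the group for the USB camera at /dev/video0.
camera_source_group usb 0
```

The output is only the source group. A complete configuration still needs the other groups (for example the sink and primary-gie groups) from one of the sample config files.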

Step 3: Using RTSP Stream

Use the following configuration snippet:

[source0]

enable=1

type=4

uri=rtsp://<your-camera-stream>
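For RTSP inputs, the source group can also carry the jitter-buffer latency and the frame-drop interval. This is a sketch under assumptions: the function name is illustrative, the 200 ms default is a starting point to tune rather than a recommendation from NVIDIA, and the URI in the example is a placeholder address.

```shell
# Prints a [source0] group for an RTSP input.
#   $1: stream URI (must start with rtsp://)
#   $2: jitter-buffer latency in milliseconds (default 200)
rtsp_source_group() {
  case "$1" in
    rtsp://*) ;;
    *) echo "not an rtsp:// URI: $1" >&2; return 1 ;;
  esac
  cat <<EOF
[source0]
enable=1
type=4
uri=$1
latency=${2:-200}
drop-frame-interval=0
EOF
}

# Example with a placeholder address (192.0.2.0/24 is reserved for documentation).
rtsp_source_group rtsp://192.0.2.10:554/stream
```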

Step 4: Video Detection

Navigate to the example folder:

cd /opt/nvidia/deepstream/deepstream-7.1/sources/apps/sample_apps/deepstream-test1

Compile the source code:

sudo make CUDA_VER=12.6

Run:

./deepstream-test1-app dstest1_config.yml

For more source examples, see /opt/nvidia/deepstream/deepstream/sources.

5. Integrating Custom Models

DeepStream supports integrating custom models via TensorRT or ONNX.

Step 1: Model Conversion

Use trtexec or tao-converter:

trtexec --onnx=model.onnx --saveEngine=model.engine
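TensorRT engines are tied to the GPU and TensorRT version they were built with, so the conversion is best run on the target Jetson itself. The sketch below only prints the trtexec command for review, optionally with the `--fp16` flag for half precision; `trtexec_command` is a helper name introduced here, and on JetPack images trtexec is often found under /usr/src/tensorrt/bin rather than on PATH.

```shell
# Prints a trtexec command line for converting an ONNX model (dry run).
#   $1: path to the ONNX file
#   $2: precision, "fp32" (default) or "fp16"
trtexec_command() {
  engine="${1%.onnx}.engine"   # model.onnx -> model.engine
  case "${2:-fp32}" in
    fp32) flag="" ;;
    fp16) flag=" --fp16" ;;
    *) echo "unsupported precision: $2" >&2; return 1 ;;
  esac
  printf 'trtexec --onnx=%s --saveEngine=%s%s\n' "$1" "$engine" "$flag"
}

# Example: show the FP16 conversion command for model.onnx.
trtexec_command model.onnx fp16
```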

Step 2: Update Configuration File

[primary-gie]

enable=1

model-engine-file=model.engine

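config-file=<your_nvinfer_config>.txt

network-type is an nvinfer setting: set network-type=0 (detector) in the [property] group of the file that config-file points to, not in [primary-gie].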
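The nvinfer plugin reads model settings from its own configuration file, which [primary-gie] normally references through the config-file key. The sketch below prints a minimal [property] group for a detector. The label file name and class count in the example are hypothetical, network-mode must match the precision the engine was built with (0 = FP32, 1 = INT8, 2 = FP16), and many custom detectors additionally need a custom bounding-box parser that is not shown here.

```shell
# Prints a minimal nvinfer [property] group for a detector.
#   $1: engine file, $2: label file, $3: number of detected classes
nvinfer_detector_config() {
  cat <<EOF
[property]
gpu-id=0
model-engine-file=$1
labelfile-path=$2
batch-size=1
network-mode=0
network-type=0
num-detected-classes=$3
gie-unique-id=1
EOF
}

# Example with hypothetical file names and a 4-class model.
nvinfer_detector_config model.engine labels.txt 4
```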

For more DeepStream TAO examples, visit https://github.com/NVIDIA-AI-IOT/deepstream_tao_apps.

6. More Examples

7. Tips and Troubleshooting

| Issue | Solution |
|---|---|
| No display in Docker | Mount X11 socket and set DISPLAY variable |
| Low frame rate | Use INT8 engine or reduce input resolution |
| USB camera not detected | Check devices with v4l2-ctl --list-devices |
| GStreamer errors | Verify plugin installation or reflash JetPack |
| RTSP latency/dropped frames | Set drop-frame-interval=0 and latency=200 |

8. Appendix

Key Paths

| Purpose | Path |
|---|---|
| Sample config files | /opt/nvidia/deepstream/deepstream/samples/configs/ |
| Model engine files | /opt/nvidia/deepstream/deepstream/models/ |
| Log directory | /opt/nvidia/deepstream/logs/ |
| DeepStream CLI tool | /usr/bin/deepstream-app |